The 2024 Advent of Code has wrapped up, and it was another joyful round of puzzle solving and coding. But if your goal was to get onto the global leaderboard, this year might have felt very different.

The rise of AI coding tools has changed the workflow of many developers. In 2023, we already had ChatGPT and Copilot. This year, new models came out (such as Claude 3.5 Sonnet), bringing more powerful coding capabilities to the table. Motivated developers can also use LLM APIs directly to build custom, automated puzzle solvers. I have always been impressed by how fast people solve AoC puzzles, but this year, with capable humans collaborating with capable computers, the results can be quite jaw-dropping.

| Year | 1st place time, day 1 part 1 (mm:ss) |
|---|---|
| 2015 | 05:38 |
| 2016 | 03:57 |
| 2017 | 00:57 |
| 2018 | 00:26 |
| 2019 | 00:23 |
| 2020 | 00:35 |
| 2021 | 00:28 |
| 2022 | 00:29 |
| 2023 | 00:12 |
| 2024 | 00:04 |

For the first puzzle of 2024, the fastest submission took only 4 seconds! That's faster than I can type `print("Ans:", ans)`, let alone read the problem.

This got me curious: how have solve times changed over the years, and is there any discernible speed-up in 2024?

Solve Time by Year Analysis

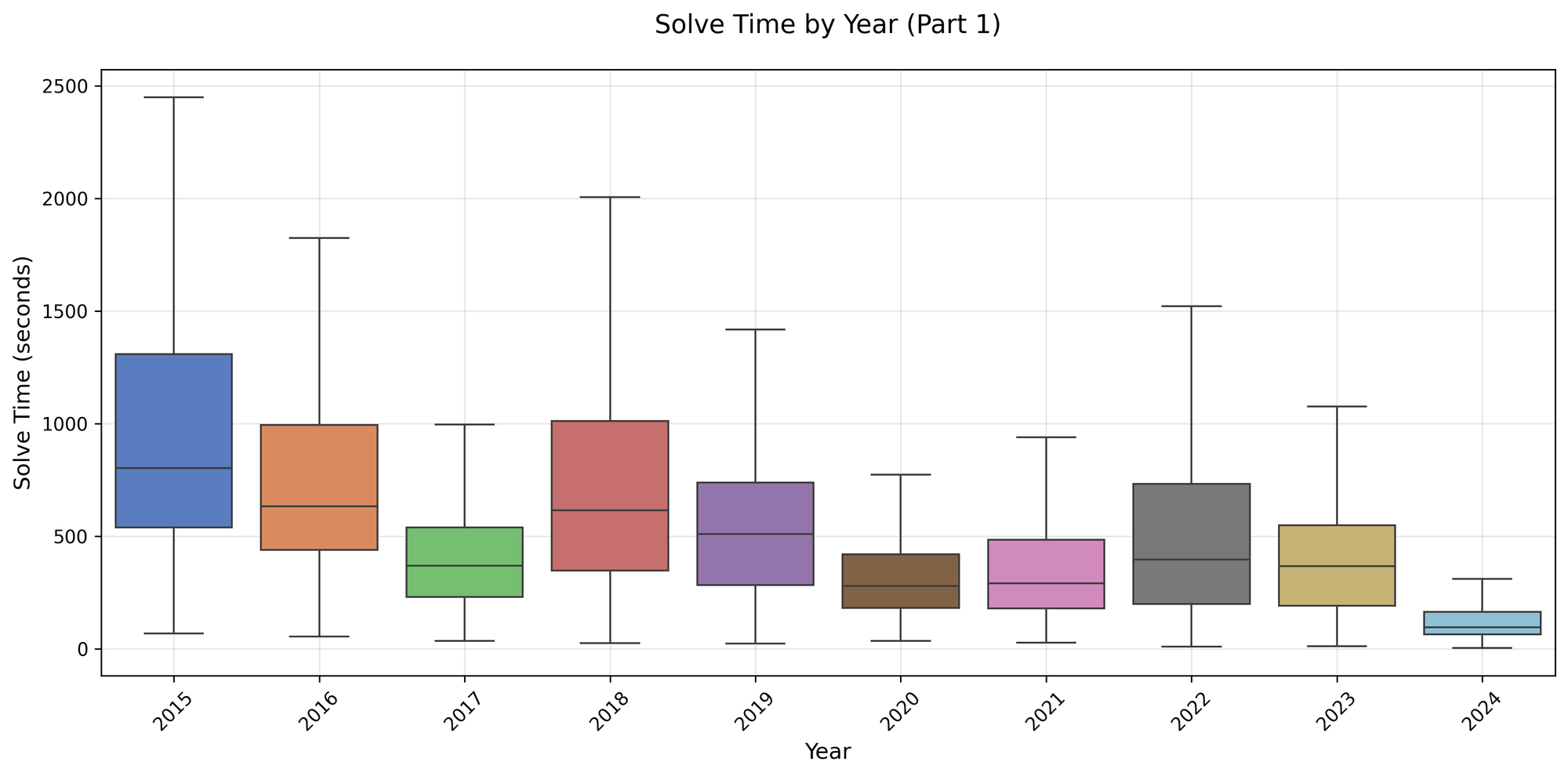

We gathered the global leaderboard data directly from Advent of Code. For each year and each day, we collected the solve time of every coder on the leaderboard (the top 100 solvers), for both part 1 and part 2 of the puzzle. That gives 100 × 25 = 2,500 solve times per year for each part. For simplicity, we ignore the fact that part 2 of day 25 is a freebie, and we lump all the days together even though difficulty varies from day to day. What we want to see is whether there is any difference in the overall distribution of solve times.
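The pooling step described above is simple bookkeeping. The snippet below is a minimal sketch, not the code actually used for this analysis: it assumes each leaderboard entry has already been scraped into a hypothetical `(year, day, part, time_str)` tuple, with times in `HH:MM:SS` form, and it pools all days of a year together, as described.

```python
from collections import defaultdict


def to_seconds(hms: str) -> int:
    """Convert an 'HH:MM:SS' string (assumed leaderboard format) to seconds."""
    hours, minutes, seconds = (int(x) for x in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def group_solve_times(records):
    """Pool (year, day, part, time_str) records into {(year, part): [seconds, ...]}.

    Days are deliberately lumped together, ignoring per-day difficulty.
    """
    grouped = defaultdict(list)
    for year, _day, part, time_str in records:
        grouped[(year, part)].append(to_seconds(time_str))
    return dict(grouped)


# Illustrative records: the day 1 part 1 winning times from the table above.
records = [
    (2023, 1, 1, "00:00:12"),
    (2024, 1, 1, "00:00:04"),
]
times = group_solve_times(records)
print(times[(2024, 1)])  # [4]
```

With a full year of data, each `(year, part)` list should hold 25 × 100 = 2,500 entries.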

There is certainly a difference: 2024 clearly stands out in the plot above. The interquartile range (IQR, the span between the 25th and 75th percentiles) of the 2024 part 1 solve times sits visibly lower than the IQR of any other year.
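To compare boxes numerically rather than by eye, one can compute each year's quartiles directly. This is a hedged sketch using only Python's standard library; the sample times are hypothetical, and `method="inclusive"` is chosen because it interpolates linearly between data points, which should match NumPy's default percentile method used by common box-plot tools.

```python
import statistics


def iqr(times):
    """Return (Q1, Q3, Q3 - Q1) for a list of solve times in seconds."""
    # quantiles(n=4) returns the three cut points Q1, median, Q3.
    q1, _median, q3 = statistics.quantiles(times, n=4, method="inclusive")
    return q1, q3, q3 - q1


# Hypothetical solve times in seconds, for illustration only.
print(iqr([60, 90, 120, 150, 180]))  # (90.0, 150.0, 60.0)
```

Q1 and Q3 show where the box sits, while `Q3 - Q1` is its width; the claim above is about the box sitting lower, so the quartile positions are the relevant numbers.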

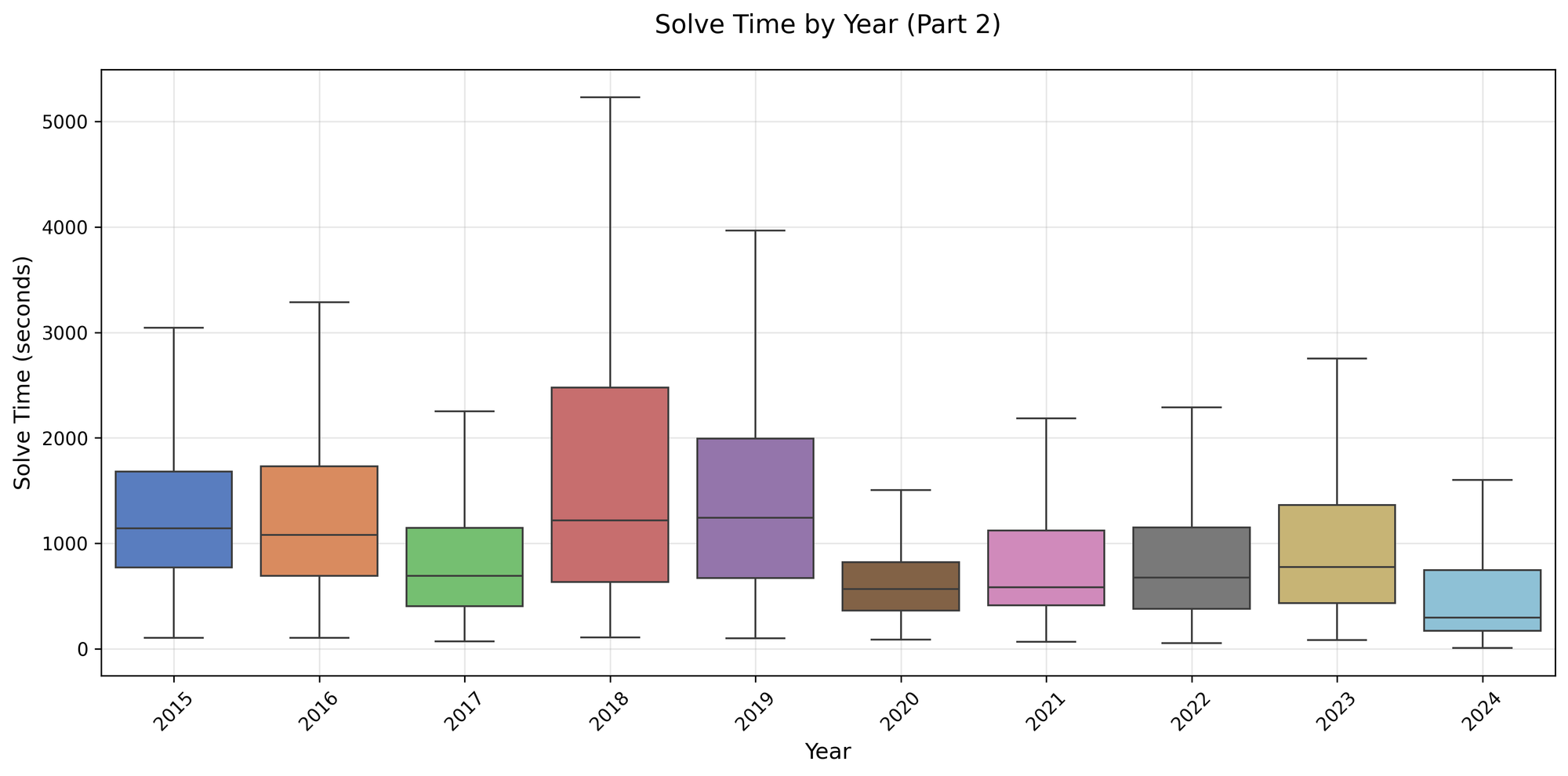

That was part 1, which is typically the easier of the two parts. We can also plot the solve times for part 2. Note that the part 2 solve time includes the part 1 solve time, so a more accurate name for "part 2 solve time" is "the time it took to solve both part 1 and part 2 (for the top 100 coders who solved both parts)".
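Because the part 2 time is cumulative, isolating the time spent on part 2 alone requires subtracting each coder's part 1 time. The sketch below is illustrative only and was not part of the analysis: the user ids and times are hypothetical, and since the part 1 and part 2 top-100 lists need not contain the same people, only coders present on both can be compared.

```python
def part2_only_times(part1, part2):
    """Estimate seconds spent on part 2 alone for one day.

    part1 / part2 map a (hypothetical) user id to cumulative solve seconds.
    Only users present on both leaderboards are included.
    """
    return {
        user: part2[user] - part1[user]
        for user in part2.keys() & part1.keys()
    }


# Hypothetical entries, in seconds since the puzzle unlocked.
p1 = {"alice": 120, "bob": 150}
p2 = {"alice": 300, "carol": 400}
print(part2_only_times(p1, p2))  # {'alice': 180}
```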

For part 2, 2024 again shows a decrease, but its distribution is not as visibly different as it was for part 1.

One might interpret this as evidence that LLMs are not omnipotent (yay?): part 2 puzzles often involve added complexity, scaling considerations, and algorithmic optimizations, and what works for part 1 doesn't always work for part 2.

How Different are the Solve Time Distributions

Comparing solve times year by year is tricky, however. It's widely recognized that some years feature tougher puzzles than others, so we shouldn't expect the solve time distributions to be the same every year. We can still measure the differences, though, and get a sense of whether 2024 is "reasonably different" or "exceptionally different".

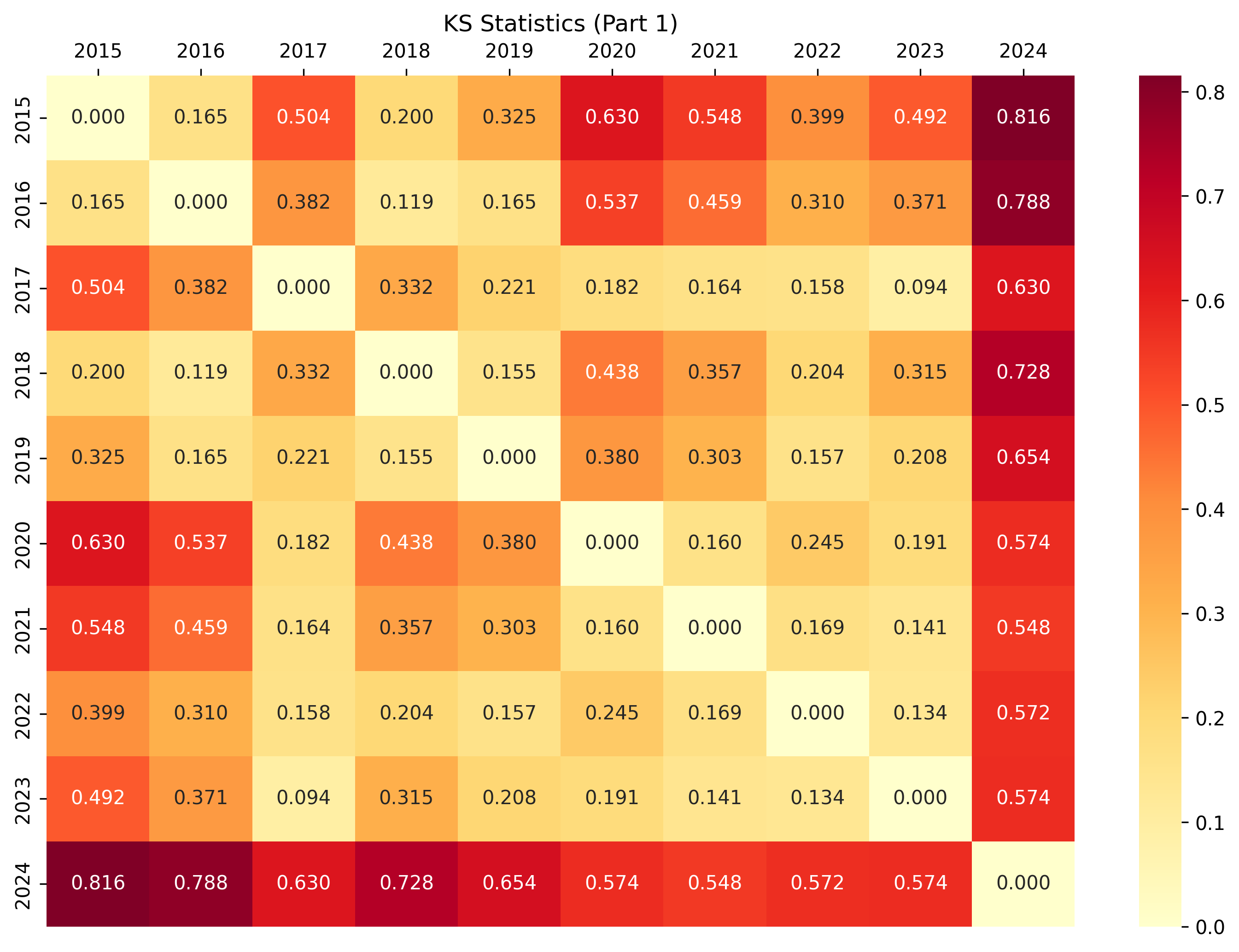

To quantify these differences, we ran a two-sample Kolmogorov-Smirnov (KS) test on each pair of years. Each test yields two values: the KS statistic, a 0-to-1 distance between the two distributions (the largest gap between their empirical cumulative distribution functions; 0 means the distributions are identical, 1 means they do not overlap at all), and the familiar p-value, which indicates whether the difference is statistically significant. The p-values were extremely small for every pair, so we focus on the KS statistic alone.
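The KS statistic itself is short to compute. The pure-Python sketch below computes it for every pair of years; it is an illustration with hypothetical sample data, not the code behind the heat maps. In practice, `scipy.stats.ks_2samp(a, b)` returns both the statistic and the p-value, and the original analysis may well have used it or a similar tool.

```python
from bisect import bisect_right
from itertools import combinations


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: max |ECDF_a(x) - ECDF_b(x)|."""
    a, b = sorted(a), sorted(b)
    d = 0.0
    # Both ECDFs only change at observed values, so checking those suffices.
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        d = max(d, abs(cdf_a - cdf_b))
    return d


def pairwise_ks(times_by_year):
    """Return {(year1, year2): KS statistic} for every pair of years."""
    return {
        (y1, y2): ks_statistic(times_by_year[y1], times_by_year[y2])
        for y1, y2 in combinations(sorted(times_by_year), 2)
    }


# Hypothetical solve times in seconds, for illustration only.
sample = {2022: [300, 420, 600], 2023: [280, 400, 650], 2024: [60, 90, 120]}
result = pairwise_ks(sample)
print(result[(2022, 2024)])  # 1.0
```

Arranging these pairwise values into a year-by-year matrix gives the input for a heat map, for example with matplotlib's `imshow`.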

With all the pairwise KS statistics in hand, we can visualize them as a heat map.

The heat map reaffirms what we saw in the earlier distribution plots: 2024 is indeed quite different from previous years.

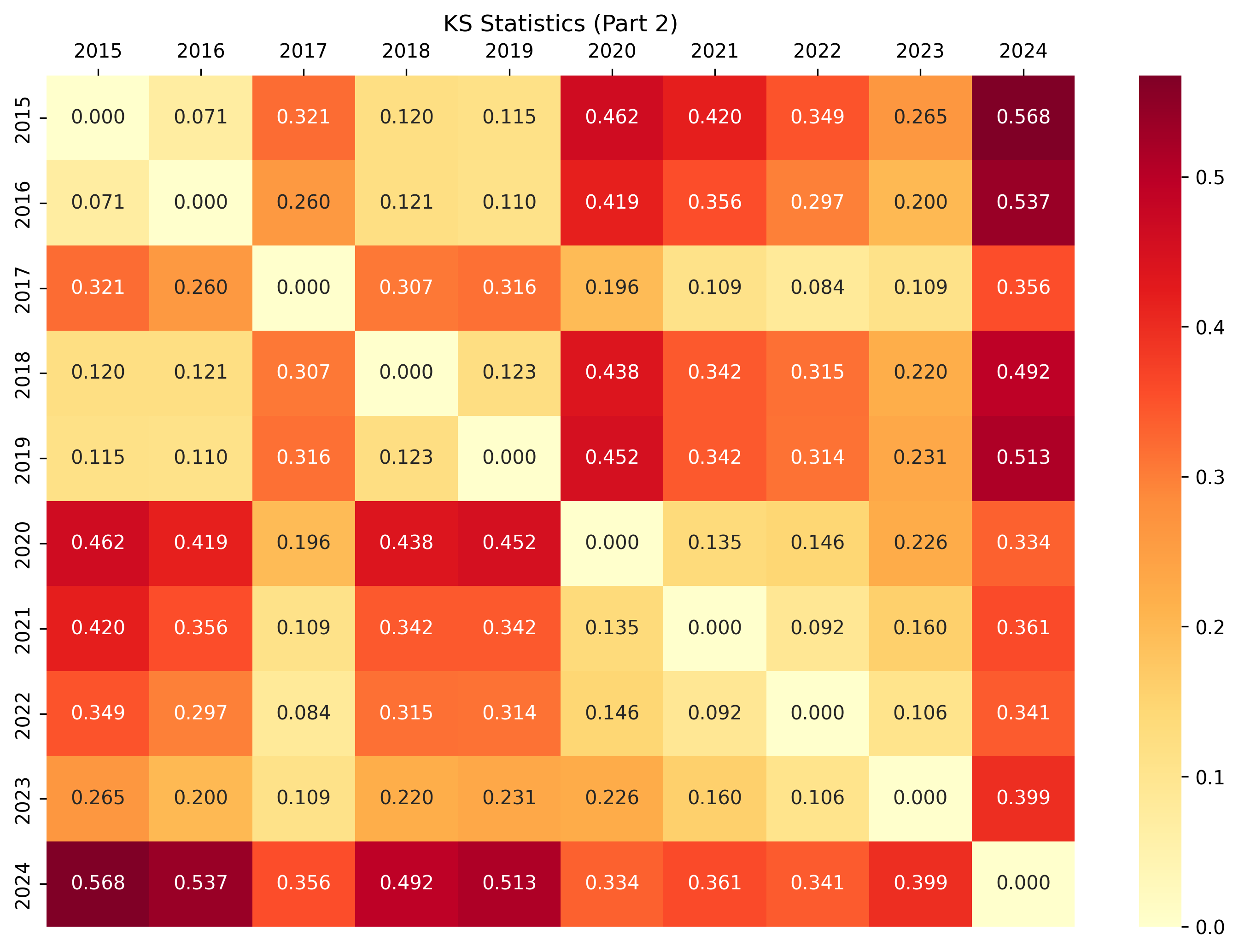

We replicated this analysis for part 2. Again, 2024 stands out, but less prominently than for part 1.

Coding in the Era of AI

The analysis here probably just corroborates what many Advent of Code enthusiasts already suspect: a meaningful portion of the leaderboard entries had AI assistance. Or, in the case of extremely fast solve times like 4 seconds, perhaps more than assistance.

So when AI can code meaningfully well, even getting onto competitive leaderboards, what does that mean for the human coders?

I, for one, feel optimistic for several reasons.

First, as we saw in the analysis, while LLMs can be extremely good at the easier part 1 puzzles, their effectiveness drops for part 2. For genuinely difficult and novel problems, where the model has seen little relevant training data, humans are indispensable. In this regard, an LLM is a lot like a calculator: it performs tasks that previously only humans could do. Thanks to calculators, we no longer have to do arithmetic by hand and can instead focus on bigger tasks like building statistical models or planning business finances. Historically, when a powerful new tool becomes available, we humans abstract up: we let the tool handle the mundane while we move on to the "bigger picture" problems.

Second, for people who enjoy solving coding puzzles, the capability of AI shouldn't take that joy away. As computers began to beat humans at games such as chess and Go, people did not stop playing them. In fact, games like Sudoku and Minesweeper have been popular for years, even though computers have always been able to solve them easily.

Lastly, many developers use Advent of Code to learn, and partnering with AI accelerates learning. It's certainly much faster to get newbie questions answered by AI than to dig through documentation. The infinite patience and endless encouragement of AI make it a great learning companion, which could be especially helpful for developers using Advent of Code to pick up a new programming language.

What will the 2025 Advent of Code look like? I'm looking forward to finding out!