The biggest AI news in the last few days was the release of the reasoning model DeepSeek R1. Not only was DeepSeek R1 benchmarked to perform remarkably well compared to OpenAI's o1 model, it is open weight. This means that curious developers are free to try them out, say, via HuggingFace or Ollama.

There are many reasons to be excited about DeepSeek R1. First, this is an open weight model that is about equally performant as leading closed source model. The economy of developing AI-enabled functionalities changes drastically when there's a free DIY frontier model you can plug in. Having control over the model weights also means you can safeguard the security of your AI application. In addition, you can extend the model with further fine tuning, or distill the weights down to a more manageable size.

Another reason the DeepSeek R1 model is causing a stir is the training cost. Reportedly, it took about $5 Million to produce DeepSeek R1. This is a small fraction of the $100 Million to $1 Billion price tags that's been thrown around for the current leading frontier models.

A third reason is science. DeepSeek R1 was trained through reinforcement learning, without the typical first step of supervised fine-tuning. This is like learning through trial and error alone, rather than from curated examples. The idea that reasoning is an emergent property that naturally develops is fascinating, and has many interesting implications for both AI development, and our understanding what is intelligence.

Ok enough talking; let's give it a spin! And trying out a new model is only scientific if we are also measuring something. So let's see how well DeepSeek R1 can answer Jeopardy questions!

Benchmark Dataset

We are using the Jeopardy questions dataset from Kaggle. There are more than 200,000 questions – so we randomly picked 250 questions (that do not involve images or movies).

HuggingFace

Even though DeepSeek R1 models are open weight, I don't trust my hardware to be able to handle them too well. So first I tried to leverage HuggingFace. There are several distilled smaller models available through HuggingFace. Since DeepSeek-R1-Distill-Qwen-32B was measured to outperforms OpenAI-o1-mini in benchmarking, we will use the 32B model.

Using HuggingFace to call a foundational model turned out to be pleasantly straightforward. All you need is:

- Obtain an API key through your HuggingFace account

- Install the

huggingface-hubpython library. Ever since I trieduva few weeks ago, I've been a fan. So it's a quickuv pip install huggingface-hub.

We construct a simple script to iterate through the 250 Jeopardy questions and ask DeepSeek R1 (32B) to provide an answer. We use only the user prompt, as recommended in DeepSeek documentation.

import os

import time

from huggingface_hub import InferenceClient

import pandas as pd

df = pd.read_csv("jeopardy_samples.csv")

client = InferenceClient(

api_key=os.getenv("HF_API_KEY")

)

def get_messages(question):

messages = [

{

"role": "user",

"content": (f"""\

You are a Jeopardy game contestant. \

You are extremely knowledgeable about Jeopardy trivia. \

You will be give a Jeopardy question. \

You should then provide the answer to the question. \

Provide just the answer, no other text. \

You don't have to structure the answer as a question - just provide the answer. \

Question: {question}

""")

}

]

return messages

questions = df[" Question"]

answers_correct = df[" Answer"]

answers_full = []

answers_short = []

for question in questions:

messages = get_messages(question)

completion = client.chat.completions.create(

model="deepseek-ai/DeepSeek-R1-Distill-Qwen-32B",

messages=messages,

)

answer_full = completion.choices[0].message.content

answers_full.append(answer_full)

# Short answer is the very last sentence of the full answer.

answer_short = answer_full.split("\n")[-1]

answers_short.append(answer_short)

# Be nice.

time.sleep(2)

# Create a new df to save the results.

df = pd.DataFrame()

df["Question"] = questions

df["Answer_R1_full"] = answers_full

df["Answer_R1_short"] = answers_short

df["Answer_correct"] = answers_correct

df.to_csv("jeopardy_results_R1_32B.csv", index=False)

This works wonderfully for a few questions, but soon, we encountered model unavailable error:

raise _format(HfHubHTTPError, str(e), response) from e

huggingface_hub.errors.HfHubHTTPError: 500 Server Error: Internal Server Error for url: https://api-inference.huggingface.co/models/deepseek-ai/DeepSeek-R1-Distill-Qwen-32B/v1/chat/completions (Request ID: RqAZMr)

Model too busy, unable to get response in less than 60 second(s)We added retries and backoffs. But the model unavailable error was pretty consistent. So let's try a different approach.

Google Colab + Ollama

I had a quick detour on the DeepSeek platform, which offers API endpoints like OpenAI, interestingly using the OpenAI SDK. But I couldn't make successful payment somehow. This should be something that will get fixed soon. Even though you can host your own DeepSeek R1 models, making inference performant is non-trivial. And DeepSeek's pricing is currently $2.19 per 1 Million token (output), compared to OpenAI o1's $60 per 1 Million token. That's really appealing.

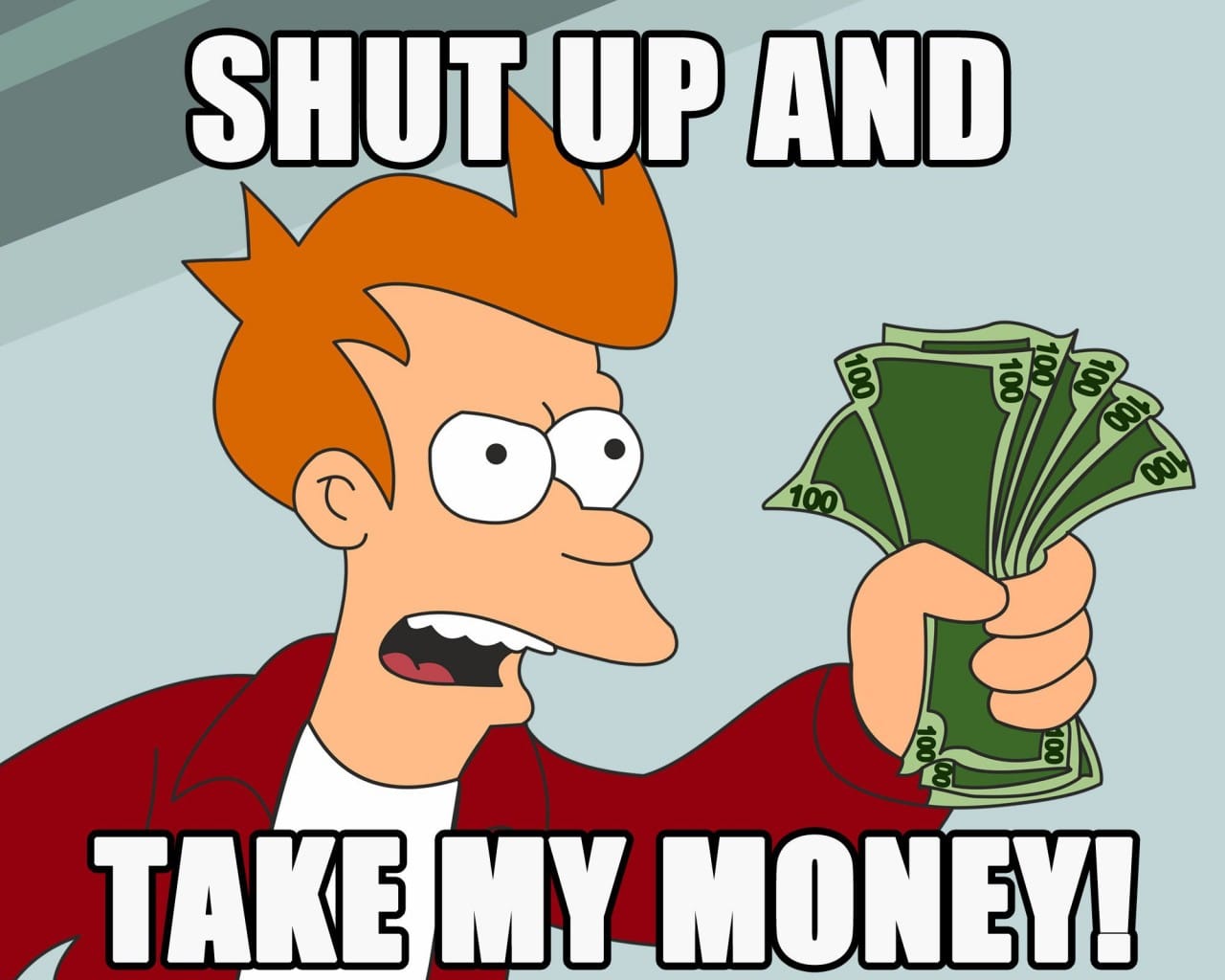

So I took another approach: Ollama to download the model, with Google Colab for the computing so I don't have to rely on my own hardware.

Google also has a line of open weight models called Gemma, and there's a nice example of how to run Gemma models through Ollama with Colab. Gemma is 2B parameters and 5GB, whereas DeepSeek-R1-Distill-Qwen-32B is 20GB, and the T4 GPU available for free tier just wasn't cutting it. So I am using A100 GPU.

Here is how to set up DeepSeek R1 in Google Colab.

!curl -fsSL https://ollama.com/install.sh | sh

!pip install -q ollama

!nohup ollama serve > ollama.log &

import ollama

MODEL_ID = 'deepseek-r1:32b'

ollama.pull(MODEL_ID)

import pandas as pd

df = pd.read_csv("jeopardy_samples.csv")

questions = df[" Question"]

answers_correct = df[" Answer"]

answers_full = []

answers_short = []

for question in questions:

response = ollama.chat(

model=MODEL_ID,

messages=[{

"role": "user",

"content": (f"""\

You are a Jeopardy game contestant. \

You are extremely knowledgeable about Jeopardy trivia. \

You will be give a Jeopardy question. \

You should then provide the answer to the question. \

Provide just the answer, no other text. \

You don't have to structure the answer as a question - just provide the answer. \

Question: {question}

""")

}]

)

answer_full = response.message.content.split("\n")

answers_full.append(answer_full)

res = [r.strip() for r in answer_full if r.strip()]

answer_short = res[-1]

answers_short.append(answer_short)

# Create a new df to save the results.

df = pd.DataFrame()

df["Question"] = questions

df["Answer_R1_full"] = answers_full

df["Answer_R1_short"] = answers_short

df["Answer_correct"] = answers_correct

df.to_csv("jeopardy_results_R1_32B.csv", index=False)

With this approach, we were able to iterate through all 250 questions, although it took some time. Watching DeepSeek reason is quite interesting. For example:

First, I'll list the numbers given: 63, 48, 33, 18. Hmm, let's see if there's a pattern here. Maybe it's an arithmetic sequence where each term decreases by a constant difference. Let me check the differences between consecutive terms.

63 to 48: That's a decrease of 15 (63 - 48 = 15). Next, 48 to 33: Again, a decrease of 15 (48 - 33 = 15). Then, 33 to 18: Still decreasing by 15 (33 - 18 = 15). So far, each time the number goes down by 15. If this pattern continues, the next term should be 18 minus 15, which is 3.

{some more rumination later...}

Answer: 3

Comparing with o1-mini

According to benchmark, the 32B distilled DeepSeek R1 can outperform OpenAI-o1-mini for many tasks. So we are doing the exact same Jeopardy test with o1-mini. We use identical user prompt, with no other parameters.

import os

import time

from openai import OpenAI

import pandas as pd

df = pd.read_csv("jeopardy_samples.csv")

client = OpenAI(api_key=os.environ.get("OPENAI_API_KEY"))

questions = df[" Question"]

answers_correct = df[" Answer"]

answers_full = []

answers_short = []

for question in questions:

completion = client.chat.completions.create(

model="o1-mini",

messages=[

# {"role": "system", "content": "You are a helpful assistant."},

{

"role": "user",

"content": (f"""\

You are a Jeopardy game contestant. \

You are extremely knowledgeable about Jeopardy trivia. \

You will be give a Jeopardy question. \

You should then provide the answer to the question. \

Provide just the answer, no other text. \

You don't have to structure the answer as a question - just provide the answer. \

Question: {question}

""")

}

]

)

answer_full = completion.choices[0].message.content

answers_full.append(answer_full)

answer_short = answer_full.split("\n")[-1]

answers_short.append(answer_short)

# Avoid rate limiting.

time.sleep(1)

# Create a new df to save the results.

df = pd.DataFrame()

df["Question"] = questions

df["Answer_full"] = answers_full

df["Answer_short"] = answers_short

df["Answer_correct"] = answers_correct

df.to_csv("jeopardy_results_o1_mini.csv", index=False)

Benchmark Results

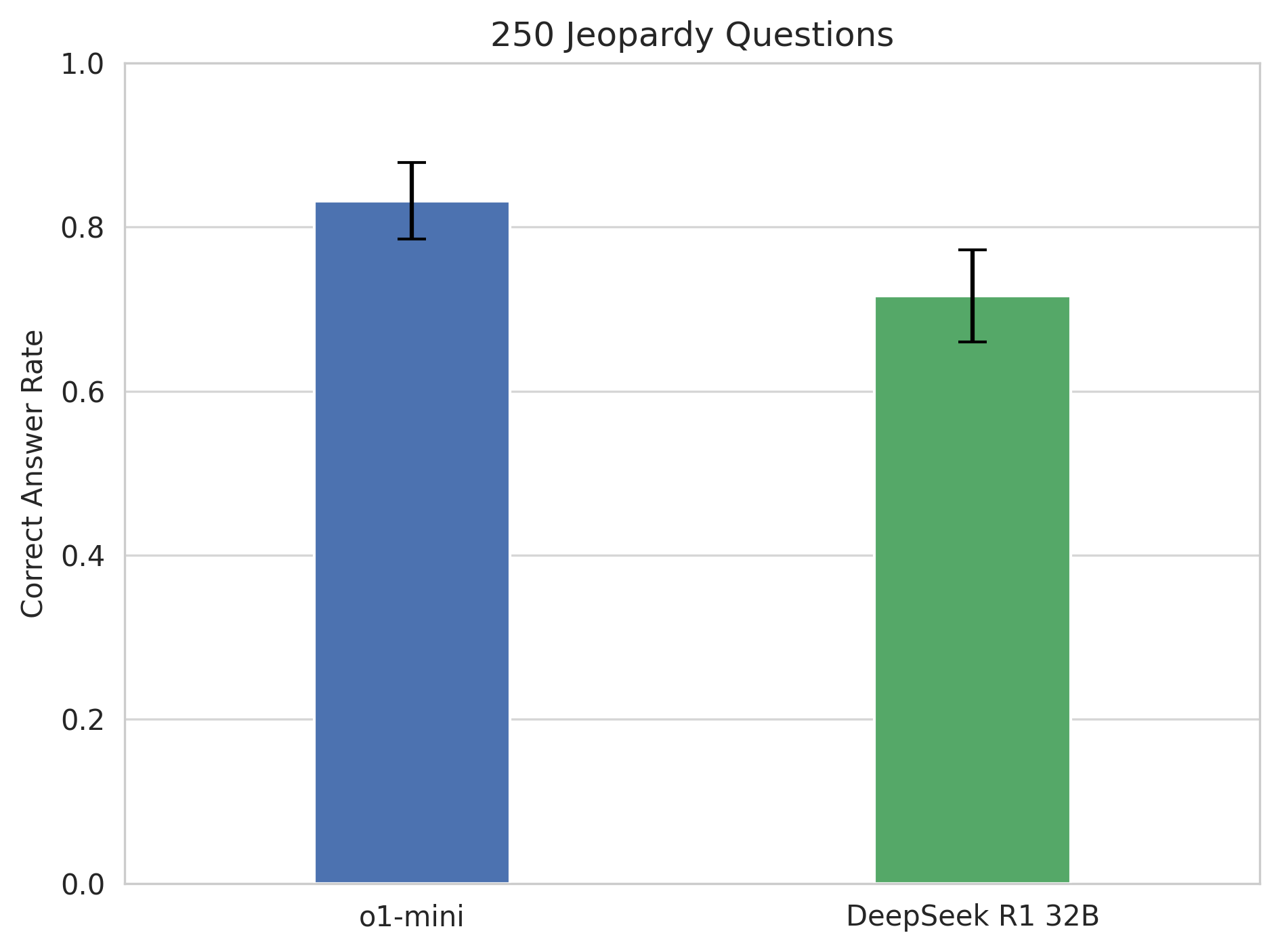

So how does DeepSeek R1 (32B) compare with o1-mini for the 250 Jeopardy question? Out of 250 questions, o1-mini got 208 correct, while DeepSeek R1 32B got 179.

There are mistakes that really didn't feel like it was the robots' fault. Here is an example:

This is a tricky question. The sequel is Iron Man 2, but since it's phrased as "in this movie's sequel", the correct answer is Iron Man. Both o1-mini and DeepSeek R1 32B got it wrong by answering Iron Man 2.

DeepSeek R1 70B

We can also push model performance higher by using a bigger model, say, deepseek-r1:70b. In comparison, o1-mini is about 100B parameters. With the bigger model (43GB), inference is a lot slower, taking nearly one minute for each question. This is after setting temperature to 0.6 as recommended.

But even without completing all 250 questions, 70B is already looking much stronger. For the first 80 questions:

- o1-mini: 70 correct

- DeepSeek R1 32B: 56 correct

- DeepSeek R1 70B: 70 correct

DeepSeek R1 70B is neck-and-neck with o1-mini! I'll leave this to run overnight.

Next Day Update

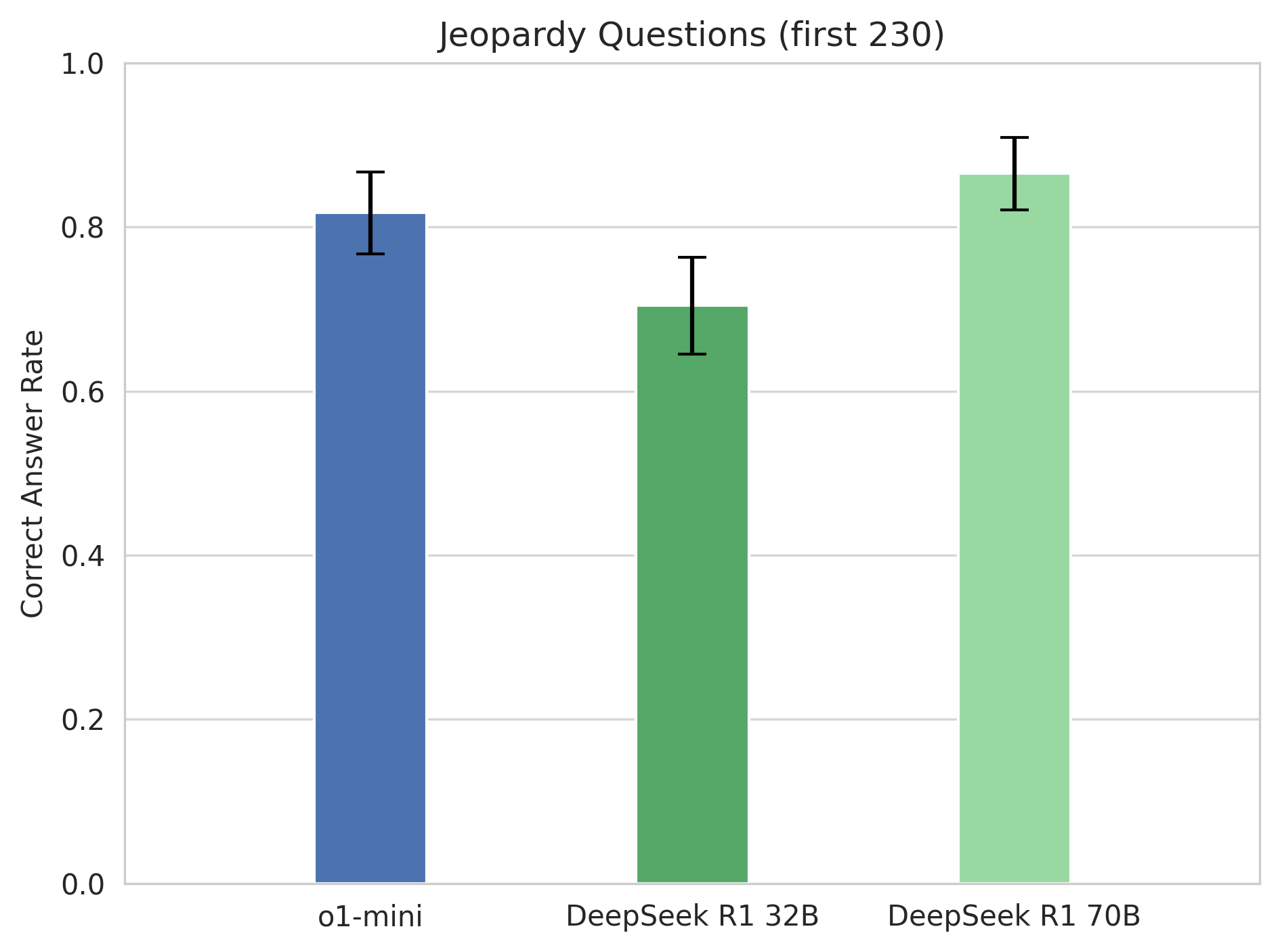

Leaving my Colab session running overnight, we got to 230 answers before it was disconnected. And out of the 230 questions:

- o1-mini: 188 correct

- DeepSeek R1 32B: 162 correct

- DeepSeek R1 70B: 199 correct

All of them are much, much better at Jeopardy than myself. And I didn't even provide categories! From the Jeopardy benchmarking, the free and open DeepSeek R1 70B is just as performant. It's amazing to think that o1-mini was only released about 4 months ago, and now there's an open weight model that is on par. What will tomorrow bring?