At this point I have done the Jeopardy benchmark three times (1, 2, and 3). It all started back in January, when the excitement around the DeepSeek R1 release turned me into a weekend LLM assessor. I'm also a big fan of AWS Bedrock, which makes it easy to evaluate several models in one go.

Last month, Anthropic released Claude 3.7 Sonnet, which quickly became the go-to model for coding assistance. I didn't get a chance to test it in my previous benchmark, so I'm eager to give it a try.

Another reason for yet another round of benchmarking comes from AWS. Ever since DeepSeek's open-weight models came out in January, AWS users have been eager to access DeepSeek through Bedrock. AWS quickly published instructions for running DeepSeek models on Bedrock via Custom Model Import. Just a few days later, AWS added DeepSeek to its model catalog, where it could be set up as a Marketplace deployment. I couldn't wait to try it, but getting the necessary SageMaker quota took a few weeks. Eventually, I was able to run DeepSeek on AWS as a Marketplace deployment, and, as described previously, it was really easy to set up.

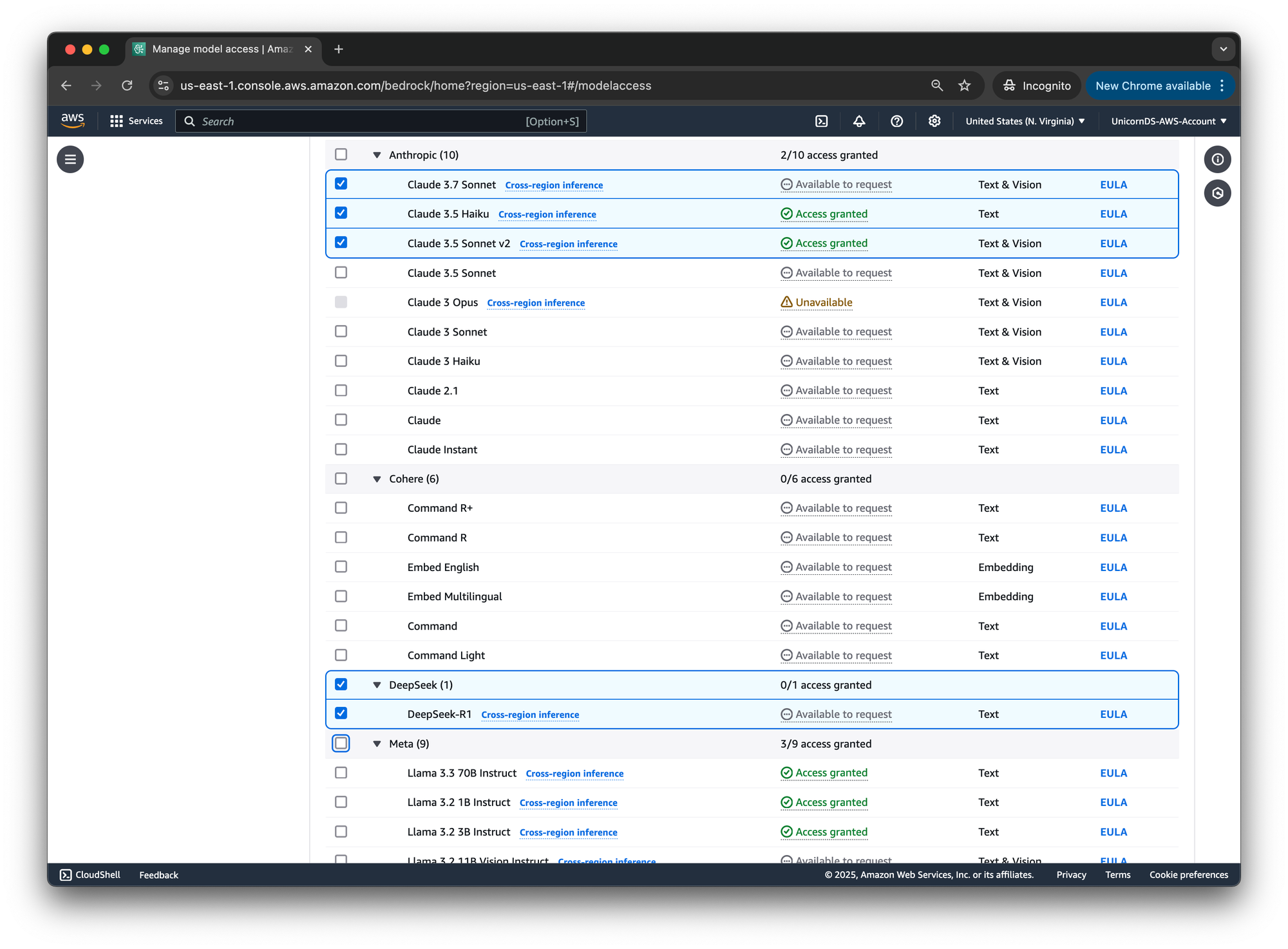

But it has become even easier: Bedrock has since added DeepSeek R1 as a fully managed model. I no longer need to run my own deployment (and remember to shut down the instance when I'm done). I can use exactly the same model invocation code and just switch the model ID.
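As a minimal sketch of what "just switch the model ID" can look like, here is a call through the Bedrock Runtime Converse API in boto3. The post doesn't show its own code, so using `converse` (rather than `invoke_model`), the hypothetical `ask`/`extract_text`/`make_client` helpers, the default region, and the inference profile ID `us.deepseek.r1-v1:0` are all assumptions; check the exact model ID for your region in the Bedrock console. DeepSeek R1 may return a reasoning block before its answer, so the helper keeps only plain text blocks.

```python
from typing import Any

# Assumed model ID (cross-region inference profile); verify in the Bedrock console.
DEEPSEEK_R1 = "us.deepseek.r1-v1:0"


def make_client(region: str = "us-west-2") -> Any:
    """Create a Bedrock Runtime client (needs boto3 and AWS credentials).

    The region default is an assumption; use one where the model is enabled.
    """
    import boto3  # imported lazily so the helpers below work without boto3

    return boto3.client("bedrock-runtime", region_name=region)


def extract_text(response: dict) -> str:
    """Join the text blocks of a Converse response, skipping reasoning blocks."""
    blocks = response["output"]["message"]["content"]
    return "".join(block["text"] for block in blocks if "text" in block)


def ask(client: Any, model_id: str, question: str, max_tokens: int = 2048) -> str:
    """Send one question to a Bedrock model; only model_id changes between models."""
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": question}]}],
        inferenceConfig={"maxTokens": max_tokens},
    )
    return extract_text(response)
```

Usage would be something like `ask(make_client(), DEEPSEEK_R1, "This city is the capital of France.")`; trying a Claude, Llama, or Nova model means passing a different `model_id` and nothing else. The Converse API is a natural fit here because it uses one request shape across providers, whereas `invoke_model` expects a provider-specific request body.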

So today, let's add these two models, DeepSeek R1 and Claude 3.7 Sonnet, to the benchmark! Here are all the models we can easily access through AWS Bedrock:

- DeepSeek R1 (released Jan. 2025; fully managed on Bedrock in Mar. 2025)

- Claude 3.7 Sonnet (released Feb. 2025)

- Claude 3.5 Sonnet v2 (released Oct. 2024)

- Claude 3.5 Haiku (released Oct. 2024)

- Llama 3.3 70B (released Dec. 2024)

- Llama 3.2 3B (released Sep. 2024)

- Llama 3.2 1B (released Sep. 2024)

- AWS Nova Pro (released Dec. 2024)

- AWS Nova Lite (released Dec. 2024)

- AWS Nova Micro (released Dec. 2024)

Bedrock Model Catalog

How easy is it to use DeepSeek on Bedrock now that it's fully managed? It's as simple as requesting access to the model. Access is granted almost instantly, and you can start calling the model right away.

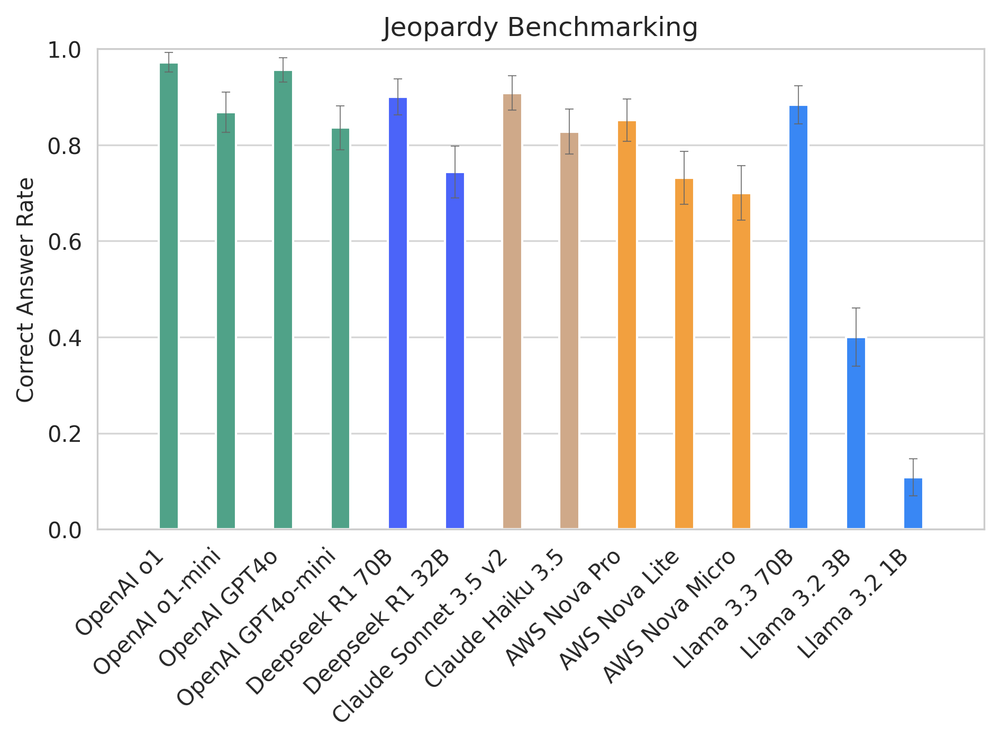

Result

In this fourth round of Jeopardy benchmarking, we now have 16 models, 12 of which we accessed through AWS Bedrock. Besides the two DeepSeek R1 Distilled models (32B and 70B), for which we used Marketplace deployment, we tested 10 different models from 4 providers (DeepSeek, Anthropic, Meta, and AWS) with exactly the same boto3 code, changing only the model ID. If you enjoy model shopping, AWS Bedrock will make you very happy!
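Here is a minimal sketch of the "same code, different model ID" loop, assuming the answer function is injected so the loop itself stays provider-agnostic. The `MODELS` table and the `run_benchmark` name are hypothetical, and the inference profile IDs are assumptions based on Bedrock's usual naming; confirm each one in the Bedrock console before use.

```python
from typing import Callable, Dict, List

# Hypothetical display-name -> model ID table; IDs are assumptions, verify in your region.
MODELS: Dict[str, str] = {
    "DeepSeek R1": "us.deepseek.r1-v1:0",
    "Claude 3.7 Sonnet": "us.anthropic.claude-3-7-sonnet-20250219-v1:0",
    "Claude 3.5 Haiku": "us.anthropic.claude-3-5-haiku-20241022-v1:0",
    "Llama 3.3 70B": "us.meta.llama3-3-70b-instruct-v1:0",
    "Nova Micro": "us.amazon.nova-micro-v1:0",
}

# (model_id, question) -> answer text
AskFn = Callable[[str, str], str]


def run_benchmark(
    ask: AskFn, models: Dict[str, str], questions: List[str]
) -> Dict[str, List[str]]:
    """Ask every model every question with the same call; only the model ID varies."""
    results: Dict[str, List[str]] = {}
    for name, model_id in models.items():
        results[name] = [ask(model_id, question) for question in questions]
    return results
```

In practice, `ask` would wrap a boto3 `bedrock-runtime` client's `converse(modelId=model_id, messages=...)` call. Injecting it keeps the loop testable offline and makes it obvious that the model ID is the only thing that changes between providers.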

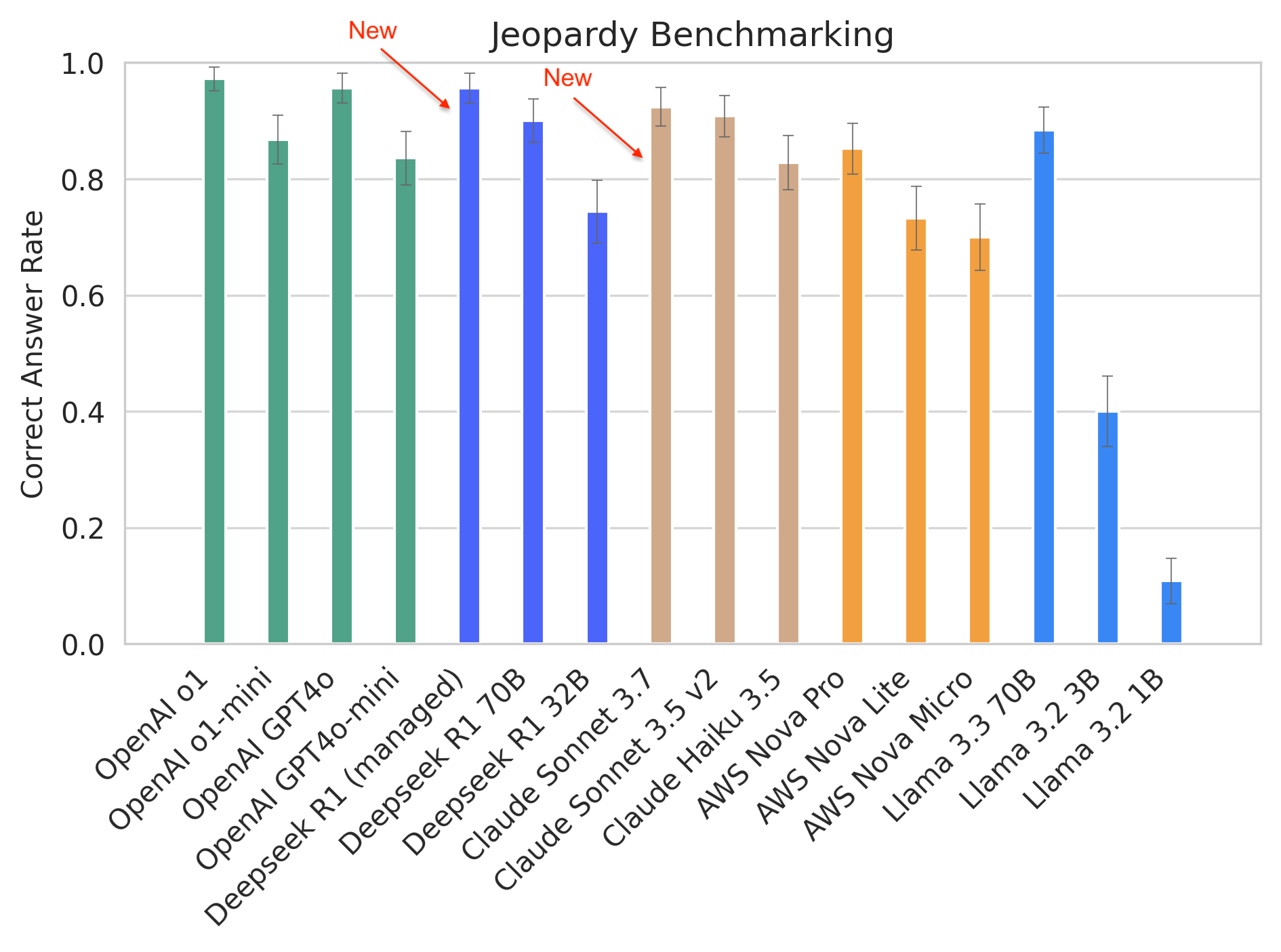

As expected, DeepSeek R1 performs very well, on par with OpenAI o1 and GPT-4o. Claude 3.7 Sonnet scores slightly better than Claude 3.5 Sonnet.

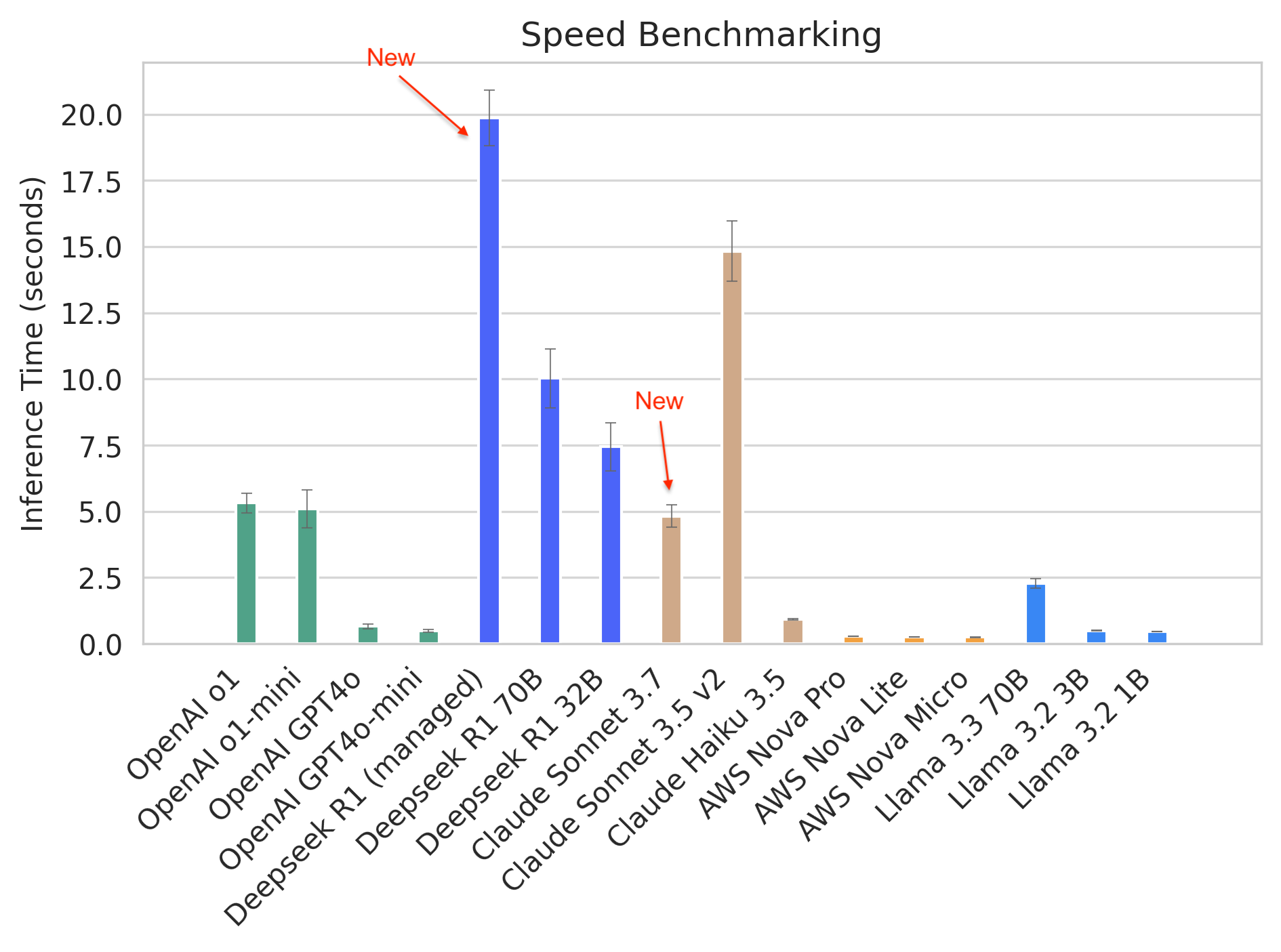

But besides performance, speed also matters. Although DeepSeek R1 answered Jeopardy questions impressively well, it is also the slowest model we have observed so far, taking nearly 20 seconds on average per question. It wouldn't fare too well in an actual Jeopardy game.
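The post doesn't say how the average response time was measured. One simple approach, sketched below under that assumption, is to time each call with a wall-clock timer and average over all questions; this includes network and queueing time, which matters for the on-demand caveat discussed next. The `timed_answers` helper is hypothetical. Converse responses also report a `metrics["latencyMs"]` field that could be averaged instead.

```python
import time
from statistics import mean
from typing import Callable, List, Tuple


def timed_answers(ask: Callable[[str], str], questions: List[str]) -> Tuple[List[str], float]:
    """Answer each question; return (answers, mean wall-clock seconds per question)."""
    if not questions:
        raise ValueError("need at least one question to compute an average")
    answers: List[str] = []
    durations: List[float] = []
    for question in questions:
        start = time.perf_counter()  # monotonic, high-resolution timer
        answers.append(ask(question))
        durations.append(time.perf_counter() - start)
    return answers, mean(durations)
```

Averaging per-question wall-clock time is what a Jeopardy contestant would experience, but with on-demand endpoints the number can shift from run to run, so it is best read as a snapshot rather than a fixed property of the model.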

On the other hand, Claude 3.7 Sonnet is faster than Claude 3.5 Sonnet v2 was when we benchmarked it a month ago. Note, though, that we are calling these models on demand on Bedrock, so response times are likely affected by overall usage. DeepSeek R1 might be slow to respond simply because I'm one of many, many users calling it.

That wraps up our Jeopardy benchmarking. We will of course keep trying out new LLMs, but next time we will also experiment with other tasks and datasets, I promise!